Authors:

(1) Yelena Mejova, ISI Foundation, Italy;

(2) Arthur Capozzi, Università degli Studi di Torino, Italy;

(3) Corrado Monti, CENTAI, Italy;

(4) Gianmarco De Francisci Morales, CENTAI, Italy.


5 DISCUSSION

The results of this study have important implications within the theoretical frameworks outlined in Section 2. The international reach of the content studied here proves the efficacy of using Twitter for a communication campaign, especially if it aims to reach Western countries where the platform’s penetration is substantial. Content that contains clear narratives proves to be especially popular, and in particular, tweets with a victim narrative receive double the number of retweets. Using multimedia to advocate a victim’s position may be especially effective, as visual depictions

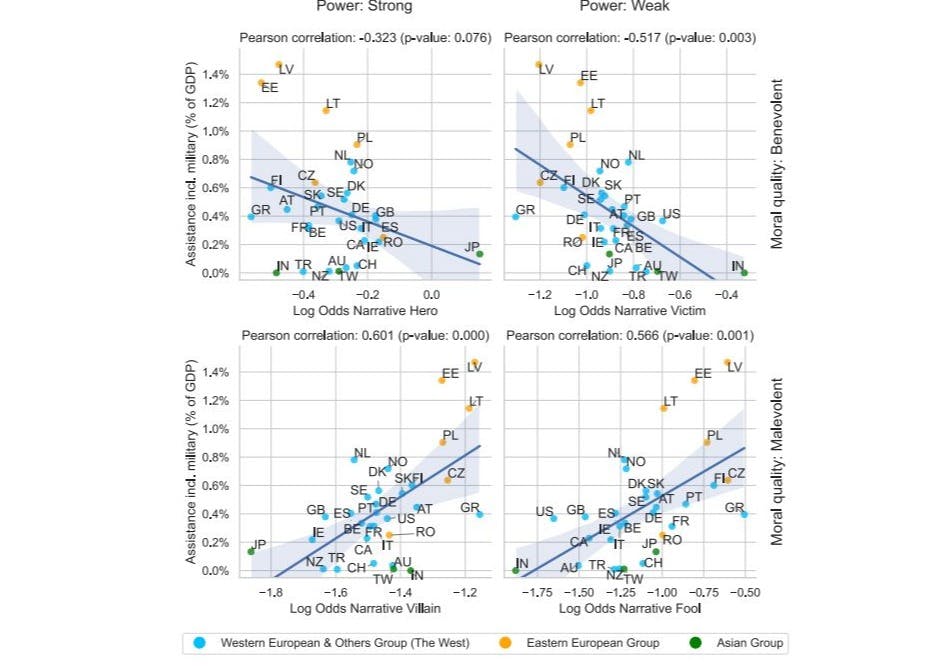

of people in need are known to activate brain circuits associated with facial and affective processing, which can compel people to give more help to strangers [24]. As social media is increasingly used to mobilize support and ask for charitable giving [9, 65], narrative emphasis may be an important variable to control in communication campaigns. Interestingly, the preference for the victim narrative by the overall audience captured here does not translate to the actions of countries, as it is negatively associated with per-capita financial assistance to Ukraine. Instead, countries that contribute more to Ukraine’s war effort resonate more with malevolent narratives: villain and fool. This phenomenon can be understood by considering that their support for Ukraine might be driven by their view of Russia as a threat, given their shared history and physical proximity. Indeed, soon after the invasion, Finland has reversed a long-time policy of neutrality and applied for membership to NATO on 18 May 2022, which was granted on 4 April 2023. In this sense, the propagation of the content posted by the examined Ukrainian accounts reflects politically relevant signals.
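The comparison behind “double the number of retweets” can be made concrete as a ratio of retweet statistics. This section does not state which statistic or baseline was used, so the sketch below assumes the ratio of mean retweets between tweets that carry a given narrative label and all tweets that do not. The function name `retweet_ratio` and the toy records are hypothetical and do not reproduce the study’s data.

```python
from statistics import mean, median

# Hypothetical toy records: (retweet_count, narrative labels). NOT the study's data.
TWEETS = [
    (120, {"victim"}),
    (80, {"victim", "hero"}),
    (40, {"hero"}),
    (50, {"villain"}),
    (30, set()),
    (60, {"fool"}),
]


def retweet_ratio(tweets, label, stat=mean):
    """Return stat(retweets of tweets with `label`) / stat(retweets of all others).

    Both the baseline (every tweet without the label) and the default summary
    statistic (the mean) are assumptions made for illustration.
    """
    with_label = [rt for rt, labels in tweets if label in labels]
    without_label = [rt for rt, labels in tweets if label not in labels]
    if not with_label or not without_label:
        raise ValueError(f"need tweets both with and without {label!r}")
    return stat(with_label) / stat(without_label)


print(round(retweet_ratio(TWEETS, "victim"), 2))          # mean-based ratio
print(round(retweet_ratio(TWEETS, "victim", median), 2))  # median-based ratio
```

Retweet counts are typically heavy-tailed, so a median-based ratio (passing `stat=median`) is often more robust than a mean-based one; which choice underlies the reported figure is not specified here.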

Another theoretical lens is that of identity building through narratives, a process that has been studied widely by the CHI community. For instance, Das and Semaan [15] posit that using a social media platform as a space to address colonial grievances and build a self-concept fosters a “narrative resilience”, which serves as a “collective and reflexive mechanism through which people work to generate resilience”. During the annotation process, we encountered many examples of the decolonization phases described by Laenui [38], including mourning, commitment, and calls to action, and other emphases may emerge as the conflict develops and, hopefully, ends (although applying the decolonization lens to the Russo-Ukrainian conflict is controversial). Further, narrative building has been used to engage marginalized communities [50], strengthen their voice [27], and promote conflict reconciliation [60], tasks that may become important towards the end of the conflict. Social media narratives have also been shown to provide members of the Ukrainian diaspora with a way to maintain an “emotional, dynamic, and constantly updating” bond with their homeland, and a space for the “negotiation and performance of ethnic and national identities” [37]. Although a qualitative analysis of identity building was not a focus of the present study, examining the narratives that establish the self-concept of Ukraine as an independent state, and its relationship with Russia, the West, and the rest of the world, is an important direction for future research.

The insights of this study are limited by its unique setting: the time period of the Russian invasion, the selection of the accounts, the particular affordances of the Twitter platform, and the uneven adoption of the platform around the world. Nevertheless, our finding that users from the countries that support Ukraine the most also retweet content from major Ukrainian accounts at a higher rate suggests a synchrony between the supportive actions of Twitter users (which could be considered a form of slacktivism [40]) and the concrete financial decisions of their countries’ governments. Conversely, the responses to the Eurobarometer survey question on sending help to Ukraine show no significant correlation with the propagation of the Ukrainian tweets, which suggests that Twitter users are more “in tune” with the decisions of their governments than a representative population sample is (indeed, the Eurobarometer responses are not correlated with financial aid for the 19 European countries in our data). It is possible that the retweeters include government entities and actors, as well as automated accounts. Unfortunately, we were unable to apply standard bot detection techniques [16] because the platform restricted access to its API in early 2023. However, the effect of such accounts should be limited in a retweet analysis, as each account can retweet a given post at most once. Further, although we strove to identify the narratives from the posters’ points of view, some subjective labels might have been affected by the authors’ opinions (see the Positionality Statement below). We hope that sharing these data will enable reproducibility and an easier re-examination of the narrative extraction process.
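The country-level analysis described above relates how often users in each country retweet the Ukrainian accounts to that country’s per-capita financial aid. This section does not name the correlation statistic, so the sketch below assumes a Spearman rank correlation (a common choice for small country samples), implemented in pure Python. It also illustrates why retweets resist inflation by automated accounts: counting each (account, post) pair once bounds what any single account can contribute. All function names and per-country values are hypothetical.

```python
from statistics import mean


def count_unique_retweets(events):
    """Count retweets per country from (retweeter_id, tweet_id, country) events.

    Each (retweeter, tweet) pair is counted once, mirroring the platform rule
    that an account can retweet a given post at most once.
    """
    seen = set()
    counts = {}
    for retweeter, tweet, country in events:
        if (retweeter, tweet) in seen:
            continue
        seen.add((retweeter, tweet))
        counts[country] = counts.get(country, 0) + 1
    return counts


def average_ranks(values):
    """1-based ranks; tied values receive the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation of two equal-length numeric sequences."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / (sx * sy)


def spearman(x, y):
    """Spearman rank correlation: the Pearson correlation of average ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length sequences of length >= 2")
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical per-country retweet rates and per-capita aid (NOT the study's data).
retweet_rate = {"A": 0.1, "B": 0.4, "C": 0.2, "D": 0.8, "E": 0.5}
aid_per_capita = {"A": 1.0, "B": 3.0, "C": 2.5, "D": 9.0, "E": 4.0}
countries = sorted(retweet_rate)
rho = spearman([retweet_rate[c] for c in countries],
               [aid_per_capita[c] for c in countries])
print(f"Spearman rho = {rho:.2f}")
```

In practice, `scipy.stats.spearmanr` computes the same coefficient and also returns a p-value, which is needed to judge significance as the text does for the Eurobarometer comparison.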

As the content analyzed here was produced during wartime, special care must be taken in an ethical analysis of the potentially sensitive material it contains, even though it was published by verified accounts. For instance, @DefenceU posts a variety of reports from the battlefield, including active combat involving artillery, images of combatants, and distressing images of affected civilians. We assume that the posting accounts have obtained sufficient permissions to post images of individuals and have performed intelligence analysis to ensure that no sensitive information is disclosed. Nonetheless, in this manuscript we have endeavored not to use any specific names or locations. Further, by their very nature, these data contain images of and information on vulnerable populations (including children), some of whom are affected by the conflict. Special care must be taken that their images and other contexts are not misused and that any harm is minimized in subsequent analyses.

Positionality Statement

All authors reside in “the West”, and one was born in Russia. Although we aim to conduct the analysis as objectively as possible, this positionality is likely to affect some interpretations of the results. We make the data and annotations available for examination by the research community and for possible alternative interpretations.

REFERENCES

[1] Substance Abuse and Mental Health Services Administration. 2014. SAMHSA’s concept of trauma and guidance for a trauma-informed approach. HHS Publication No. (SMA) 14-4884. Substance Abuse and Mental Health Services Administration, 1–27. (Cited on 5)

[2] Brandon C Boatwright and Andrew S Pyle. 2023. “Don’t Mess with Ukrainian Farmers”: An examination of Ukraine and Kyiv’s official Twitter accounts as crisis communication, public diplomacy, and nation building during Russian invasion. Public Relations Review 49, 3 (2023), 102338. (Cited on 4)

[3] Lisa Bogerts and Maik Fielitz. 2019. Do You Want Meme War? Post-Digital Cultures of the Far Right: Online Actions and Offline Consequences in Europe and the US (2019), 137–153. https://doi.org/10.14361/9783839446706 (Cited on 4)

[4] Göran Bolin and Per Ståhlberg. 2023. Nation branding vs. nation building revisited: Ukrainian information management in the face of the Russian invasion. Place Branding and Public Diplomacy 19, 2 (2023), 218–222. (Cited on 3)

[5] Kenneth J Boyte. 2017. An analysis of the social-media technology, tactics, and narratives used to control perception in the propaganda war over Ukraine. Journal of Information Warfare 16, 1 (2017), 88–111. (Cited on 3)

[6] Vikram Kamath Cannanure, Delvin Varghese, Cuauhtémoc Rivera-Loaiza, Faria Noor, Dipto Das, Pranjal Jain, Meiyin Chang, Marisol Wong-Villacres, Naveena Karusala, Nova Ahmed, Sarina C Till, Bernard Ijesunor Akhigbe, Melissa Densmore, Susan Dray, Christian Sturm, and Neha Kumar. 2023. HCI Across Borders: Towards Global Solidarity. In Extended Abstracts of the 2023 CHI Conference on Human Factors in Computing Systems (CHI EA ’23). Association for Computing Machinery, New York, NY, USA, Article 351, 5 pages. https://doi.org/10.1145/3544549.3573806 (Cited on 5)

[7] Arthur Capozzi, Gianmarco De Francisci Morales, Yelena Mejova, Corrado Monti, André Panisson, and Daniela Paolotti. 2021. Clandestino or Rifugiato? Anti-Immigration Facebook Ad Targeting in Italy. In Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems (CHI ’21). Association for Computing Machinery, New York, NY, USA, Article 179, 15 pages. https://doi.org/10.1145/3411764.3445082 (Cited on 5)

[8] Viktor Chagas, Fernanda Freire, Daniel Rios, and Dandara Magalhães. 2019. Political memes and the politics of memes: A methodological proposal for content analysis of online political memes. First Monday (2019). (Cited on 6)

[9] Cassandra M Chapman, Morgana Lizzio-Wilson, Zahra Mirnajafi, Barbara M Masser, and Winnifred R Louis. 2022. Rage donations and mobilization: Understanding the effects of advocacy on collective giving responses. British Journal of Social Psychology 61, 3 (2022), 882–906. (Cited on 17)

[10] Emily Chen and Emilio Ferrara. 2023. Tweets in time of conflict: A public dataset tracking the twitter discourse on the war between Ukraine and Russia. In Proceedings of the International AAAI Conference on Web and Social Media, Vol. 17. 1006–1013. (Cited on 3)

[11] W Timothy Coombs. 2021. Ongoing crisis communication: Planning, managing, and responding. Sage publications. (Cited on 3)

[12] Nicholas J Cull, David H Culbert, and David Welch. 2003. Propaganda and mass persuasion: A historical encyclopedia, 1500 to the present. Bloomsbury Publishing USA. (Cited on 12)

[13] Guilherme Ghisoni da Silva. 2021. Memes war: The Political Use Of Pictures In Brazil 2019. Philosophos - Revista de Filosofia 25, 2 (2021). https://doi.org/10.5216/phi.v25i2.64490 (Cited on 4)

[14] Maxime Dafaure. 2020. The “great meme war:” The alt-right and its multifarious enemies. Angles. New Perspectives on the Anglophone World 10 (2020). (Cited on 4)

[15] Dipto Das and Bryan Semaan. 2022. Collaborative identity decolonization as reclaiming narrative agency: Identity work of Bengali communities on Quora. In Proceedings of the 2022 CHI Conference on Human Factors in Computing Systems. 1–23. (Cited on 18)

[16] Clayton Allen Davis, Onur Varol, Emilio Ferrara, Alessandro Flammini, and Filippo Menczer. 2016. BotOrNot: A system to evaluate social bots. In Proceedings of the 25th International Conference Companion on World Wide Web. 273–274. (Cited on 18)

[17] Richard Dawkins. 1976. The Selfish Gene. Oxford University Press. (Cited on 4)

[18] Constance de Saint Laurent, Vlad P Glăveanu, and Ioana Literat. 2021. Internet memes as partial stories: Identifying political narratives in coronavirus memes. Social Media+ Society 7, 1 (2021), 2056305121988932. (Cited on 2, 3, 4, 6)

[19] Rachael Dietkus. 2022. The Call for Trauma-Informed Design Research and Practice. Design Management Review 33, 2 (2022), 26–31. (Cited on 5)

[20] Charmaine Du Plessis. 2018. Social media crisis communication: Enhancing a discourse of renewal through dialogic content. Public relations review 44, 5 (2018), 829–838. (Cited on 3)

[21] Marta Dynel and Fabio Indìo Massimo Poppi. 2021. Caveat emptor: Boycott through digital humour on the wave of the 2019 Hong Kong protests. Information, Communication & Society 24, 15 (2021), 2323–2341. (Cited on 4)

[22] European Commission. 2023. Standard Eurobarometer STD98. https://data.europa.eu/data/datasets/s2872_98_2_std98_eng?locale=en [Accessed 20-06-2023]. (Cited on 6)

[23] Dominique Geissler, Dominik Bär, Nicolas Pröllochs, and Stefan Feuerriegel. 2022. Russian propaganda on social media during the 2022 invasion of Ukraine. arXiv preprint arXiv:2211.04154 (2022). (Cited on 4)

[24] Alexander Genevsky, Daniel Västfjäll, Paul Slovic, and Brian Knutson. 2013. Neural underpinnings of the identifiable victim effect: Affect shifts preferences for giving. Journal of Neuroscience 33, 43 (2013), 17188–17196. (Cited on 17)

[25] Jeff Giesea. 2015. It’s time to embrace memetic warfare. Defence Strategic Communications 1, 1 (2015), 67–75. (Cited on 1)

[26] Viveca S Greene. 2019. “Deplorable” satire: Alt-right memes, white genocide tweets, and redpilling normies. Studies in American Humor 5, 1 (2019), 31–69. (Cited on 4)

[27] Brett A Halperin, Gary Hsieh, Erin McElroy, James Pierce, and Daniela K Rosner. 2023. Probing a Community-Based Conversational Storytelling Agent to Document Digital Stories of Housing Insecurity. In Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems. 1–18. (Cited on 18)

[28] Lene Hansen. 2011. Theorizing the image for security studies: Visual securitization and the Muhammad cartoon crisis. European journal of international relations 17, 1 (2011), 51–74. (Cited on 4)

[29] Margot Hare and Jason Jones. 2023. Slava Ukraini: Exploring Identity Activism in Support of Ukraine via the Ukraine Flag Emoji on Twitter. Journal of Quantitative Description: Digital Media 3 (2023). (Cited on 4)

[30] Maxine Harris and Roger D Fallot (Eds.). 2001. Using trauma theory to design service systems. Jossey-Bass/Wiley. (Cited on 5)

[31] Juan Pablo Hourcade and Natasha E. Bullock-Rest. 2011. HCI for Peace: A Call for Constructive Action. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI ’11). Association for Computing Machinery, New York, NY, USA, 443–452. https://doi.org/10.1145/1978942.1979005 (Cited on 5)

[32] Hua Jiang and Yi Luo. 2017. Social media engagement for crisis communication. Social Media and Crisis Communication; Taylor & Francis: Abingdon, UK (2017), 401. (Cited on 3)

[33] Nathan Johnson, Benjamin Turnbull, Thomas Maher, and Martin Reisslein. 2020. Semantically modeling cyber influence campaigns (CICs): Ontology model and case studies. IEEE Access 9 (2020), 9365–9382. (Cited on 3)

[34] Aharon Kantorovich. 2013. An Evolutionary View of Science: Imitation and Memetics. http://philsci-archive.pitt.edu/9912/ Social Science Information. (Cited on 4)

[35] Stephen Karpman. 1968. Fairy tales and script drama analysis. Transactional analysis bulletin 7, 26 (1968), 39–43. (Cited on 4, 6)

[36] Georgiy Kasianov. 2022. The war over Ukrainian identity. Foreign Affairs (2022). (Cited on 4)

[37] Ivan Kozachenko. 2021. Transformed by contested digital spaces? Social media and Ukrainian diasporic ‘selves’ in the wake of the conflict with Russia. Nations and Nationalism 27, 2 (2021), 377–392. (Cited on 18)

[38] Poka Laenui. 2000. Processes of decolonization. Reclaiming Indigenous voice and vision (2000), 150–160. (Cited on 18)

[39] Benjamin Lee. 2020. ‘Neo-Nazis Have Stolen Our Memes’: Making Sense of Extreme Memes. Digital extremisms: Readings in violence, radicalisation and extremism in the online space (2020), 91–108. (Cited on 4)

[40] Yu-Hao Lee and Gary Hsieh. 2013. Does slacktivism hurt activism? The effects of moral balancing and consistency in online activism. In Proceedings of the SIGCHI conference on human factors in computing systems. 811–820. (Cited on 5, 18)

[41] Chen Ling, Ihab AbuHilal, Jeremy Blackburn, Emiliano De Cristofaro, Savvas Zannettou, and Gianluca Stringhini. 2021. Dissecting the meme magic: Understanding indicators of virality in image memes. Proceedings of the ACM on human-computer interaction 5, CSCW1 (2021), 1–24. (Cited on 5, 6, 7)

[42] Mai Skjott Linneberg and Steffen Korsgaard. 2019. Coding qualitative data: A synthesis guiding the novice. Qualitative research journal (2019). (Cited on 7)

[43] Mykola Makhortykh and Yehor Lyebyedyev. 2015. #SaveDonbassPeople: Twitter, propaganda, and conflict in Eastern Ukraine. The Communication Review 18, 4 (2015), 239–270. (Cited on 3)

[44] Mykola Makhortykh and Maryna Sydorova. 2017. Social media and visual framing of the conflict in Eastern Ukraine. Media, war & conflict 10, 3 (2017), 359–381. (Cited on 3)

[45] Ilan Manor and Elad Segev. 2015. America’s selfie: How the US portrays itself on its social media accounts. In Digital diplomacy. Routledge, 89–108. (Cited on 3)

[46] Alessandra Massa and Giuseppe Anzera. 2022. The platformization of military communication: The digital strategy of the Israel Defense Forces on Twitter. Media, War & Conflict (2022), 17506352221101257. (Cited on 4)

[47] Ally McCrow-Young and Mette Mortensen. 2021. Countering spectacles of fear: Anonymous’ meme ‘war’ against ISIS. European Journal of Cultural Studies 24, 4 (2021), 832–849. (Cited on 4)

[48] Joseph S Nye Jr. 2009. Smart power. New Perspectives Quarterly 26, 2 (2009), 7–9. (Cited on 3)

[49] Orestis Papakyriakopoulos, Severin Engelmann, and Amy Winecoff. 2023. Upvotes? Downvotes? No Votes? Understanding the Relationship between Reaction Mechanisms and Political Discourse on Reddit. In Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems (CHI ’23). Association for Computing Machinery, New York, NY, USA, Article 549, 28 pages. https://doi.org/10.1145/3544548.3580644 (Cited on 5)

[50] Lucy Pei, Benedict Salazar Olgado, and Roderic Crooks. 2022. Narrativity, Audience, Legitimacy: Data Practices of Community Organizers. In CHI Conference on Human Factors in Computing Systems Extended Abstracts. 1–6. (Cited on 18)

[51] Liudmyla Pidkuĭmukha and Nadiya Kiss. 2020. Battle of narratives: Political memes during the 2019 Ukrainian presidential election. Cognitive Studies 20 (2020). (Cited on 4)

[52] Stephen D Reese, Oscar H Gandy Jr, and August E Grant. 2001. Prologue: Framing public life: A bridging model for media research. In Framing public life. Routledge, 23–48. (Cited on 4)

[53] Hannah Rose. 2022. Zelenskyy, ‘Denazification’ and the Redirection of Holocaust Victimhood. https://gnet-research.org/2022/04/07/zelenskyy-denazification-and-the-redirection-of-holocaust-victimhood/ (Cited on 4)

[54] Carol B Schwalbe and Shannon M Dougherty. 2015. Visual coverage of the 2006 Lebanon War: Framing conflict in three US news magazines. Media, war & conflict 8, 1 (2015), 141–162. (Cited on 4)

[55] Carol F Scott, Gabriela Marcu, Riana Elyse Anderson, Mark W Newman, and Sarita Schoenebeck. 2023. Trauma-Informed Social Media: Towards Solutions for Reducing and Healing Online Harm. In Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems. 1–20. (Cited on 5)

[56] Tatiana Serafin. 2022. Ukraine’s President Zelensky takes the Russia/Ukraine war viral. Orbis 66, 4 (2022), 460–476. (Cited on 3, 4)

[57] Fei Shen, Erkun Zhang, Hongzhong Zhang, Wujiong Ren, Quanxin Jia, and Yuan He. 2023. Examining the differences between human and bot social media accounts: A case study of the Russia-Ukraine War. First Monday (2023). (Cited on 4)

[58] Lisa Silvestri. 2016. Mortars and memes: Participating in pop culture from a war zone. Media, War & Conflict 9, 1 (2016), 27–42. (Cited on 4)

[59] Stefan Soesanto. 2022. The IT army of Ukraine: structure, tasking, and eco-system. CSS Cyberdefense Reports (2022). (Cited on 3)

[60] Oliviero Stock, Massimo Zancanaro, Chaya Koren, Cesare Rocchi, Zvi Eisikovits, Dina Goren Bar, Daniel Tomasini, and Patrice Weiss. 2008. A co-located interface for narration to support reconciliation in a conflict: initial results from Jewish and Palestinian youth. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems. 1583–1592. (Cited on 18)

[61] Peter Suciu. 2022. Is Russia’s Invasion Of Ukraine The First Social Media War? Forbes. https://www.forbes.com/sites/petersuciu/2022/03/01/is-russias-invasion-of-ukraine-the-first-social-media-war (Cited on 3)

[62] Bradley E Wiggins. 2016. Crimea River: Directionality in memes from the Russia-Ukraine conflict. International Journal of Communication 10 (2016), 35. (Cited on 4)

[63] Heather Suzanne Woods and Leslie A Hahner. 2019. Make America meme again: The rhetoric of the Alt-Right. Peter Lang New York. (Cited on 4)

[64] Kai-Cheng Yang, Emilio Ferrara, and Filippo Menczer. 2022. Botometer 101: Social bot practicum for computational social scientists. Journal of Computational Social Science 5, 2 (2022), 1511–1528. (Cited on 4)

[65] Jinyi Ye, Nikhil Jindal, Francesco Pierri, and Luca Luceri. 2023. Online Networks of Support in Distressed Environments: Solidarity and Mobilization during the Russian Invasion of Ukraine. arXiv preprint arXiv:2304.04327 (2023). (Cited on 17)

[66] Olesia Yehorova, Antonina Prokopenko, and Anna Zinchenko. 2023. Towards a typology of humorous wartime tweets: the case of Ukraine 2022. The European Journal of Humour Research 11, 1 (2023), 1–26. (Cited on 4)

[67] Eline Zenner and Dirk Geeraerts. 2018. One does not simply process memes: Image macros as multimodal constructions. Cultures and traditions of wordplay and wordplay research (2018), 167–194. (Cited on 4)

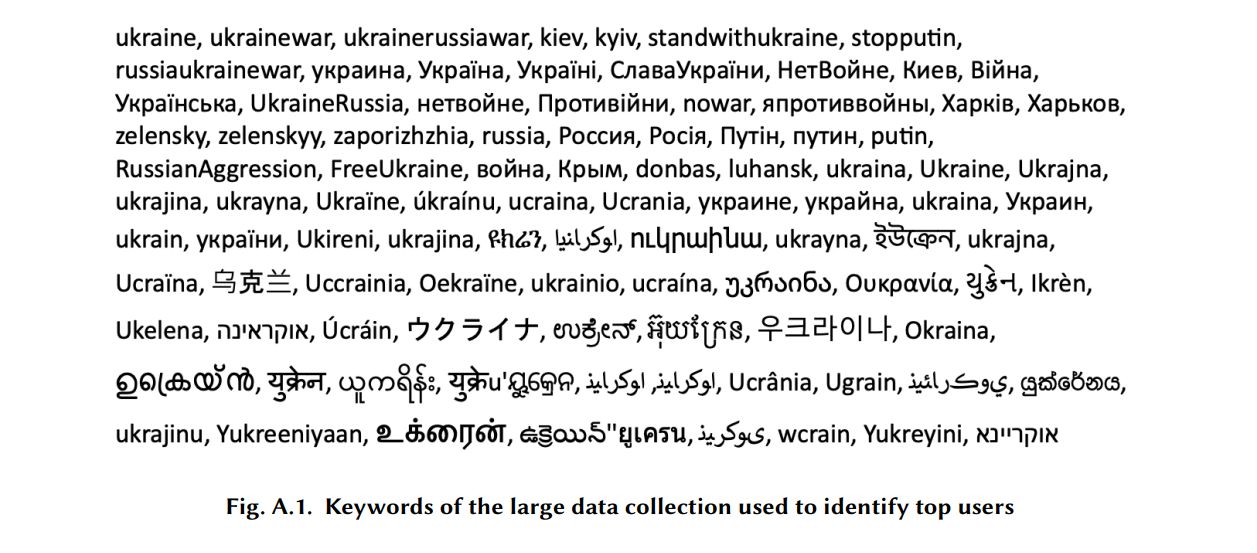

A ORIGINAL DATA COLLECTION KEYWORDS

The first data collection used the Twitter Streaming API, filtering on keywords identified through snowball sampling during the first week of the invasion. The keyword “Ukraine” was translated into several of the most widely used languages and alphabets, as shown in Figure A.1.
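Snowball sampling of keywords can be pictured as an iterative expansion: start from seed terms, collect matching posts, and add the terms that co-occur most often. The section does not describe the authors’ expansion criteria, so the sketch below assumes hashtag co-occurrence as the expansion signal; `snowball_keywords`, `fetch_tweets`, `rounds`, `top_k`, and the toy corpus are all hypothetical, and the naive substring matching does not reproduce the Streaming API’s own filtering rules.

```python
import re
from collections import Counter

HASHTAG = re.compile(r"#\w+")  # \w also matches Cyrillic and other scripts


def snowball_keywords(seeds, fetch_tweets, rounds=2, top_k=5):
    """Grow a keyword set by repeatedly adding the most frequent new hashtags.

    seeds        -- initial keywords, e.g. translations of "Ukraine"
    fetch_tweets -- hypothetical callable: keyword set -> iterable of tweet texts
    rounds/top_k -- hypothetical stopping parameters
    """
    keywords = {s.lower() for s in seeds}
    for _ in range(rounds):
        counts = Counter()
        for text in fetch_tweets(keywords):
            for tag in HASHTAG.findall(text.lower()):
                if tag not in keywords:
                    counts[tag] += 1
        new_tags = [tag for tag, _ in counts.most_common(top_k)]
        if not new_tags:
            break  # no new co-occurring hashtags: the sample has saturated
        keywords.update(new_tags)
    return keywords


# Toy corpus standing in for a stream sample (NOT the study's data).
corpus = [
    "Stand with #Ukraine #StandWithUkraine",
    "#StandWithUkraine #Україна",
    "#Україна #Украина новини",
    "unrelated post #weather",
]


def fetch(keywords):
    # Naive case-insensitive substring match over the toy corpus.
    return [t for t in corpus if any(k in t.lower() for k in keywords)]


print(sorted(snowball_keywords({"#Ukraine"}, fetch, rounds=3)))
```

Each round can only reach posts that already match the current keyword set, which is how a Latin-script seed eventually pulls in Cyrillic spellings through bridging posts.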

B ANNOTATION CODEBOOK

(1) Number of panels.

• Single panel: images that are composed of only one image

• Multiple panels: images/posts that are composed of a series of images

(2) Type of image.

• Photo: a picture taken by a camera

• Screenshot: an image captured from a computer screen

• Illustration: a drawing, painting, or printed work of art

(3) Scale.

• Close-up: a shot that tightly frames a person or object, such as the subject’s face taking up the whole frame

• Medium shot: a shot that gives roughly equal weight to the subjects and the background, such as when the frame is “cutting the person in half”

• Long shot: a shot in which the subject is no longer identifiable and the focus is on the larger scene rather than on one subject

• Other: there is no clear subject or background

(4) Type of subject.

• Object: refers to a material thing that can be seen and touched, like a table, a bottle, a building, or even a celestial body

• Character: refers to people or anthropomorphized creatures/objects, such as cartoon characters

• Scene: when the situation or activity depicted in an image is its main focus, instead of it being on the single characters or objects depicted in it

• Creature: refers to an animal that is not anthropomorphized

• Text: text is the focus of the image.

• Other: anything else, e.g. posters or infographics

(5) Attributes of the subject. For images whose subject is one or more characters, we consider whether the image’s visual attraction lies with the character’s facial expression or with their posture. For the other attributes, we identify five features.

• Facial expression: only for a character

• Posture: only for a character

• Poster: an informative, large-scale image that typically combines textual and graphic elements, although some posters contain only one of the two. Posters are generally designed to be viewed from a distance and inform or instruct the viewer through text, symbols, graphs, or a combination of these.

(6) Contains words. Whether words are a part of the image, including superimposed on top of an existing image.

(7) Character face/posture emotion.

• Positive, Negative, or Neutral: only for a character annotated as facial expression

(8) Narrative (more than one possible).

• Hero (benevolent, strong)

• Victim (benevolent, weak)

• Villain (malevolent, strong)

• Fool (malevolent, weak)

• Other

• None

(9) Emotional appeal. [Open label]

• Humor: satirical, sarcastic, or funny

• Fear: threats, harms, or calamities

• Outrage: scandals, corruption, or moral hazards

• Pride: objects/scenes of adoration or affection

• Compassion: sympathy and sorrow for another who is stricken by misfortune

• None

(10) Intent. [Open label]

• Informational

• Call to action

• Other

(11) Actor. [Open label]
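Item (8) of the codebook defines the four narrative roles along two axes, benevolent/malevolent and strong/weak, and allows several roles per post. A minimal sketch of that structure follows; the names `Narrative`, `parse_narratives`, and `has_malevolent` are hypothetical and do not describe the authors’ annotation tooling. Treating “None” as mutually exclusive with other labels is an added assumption, since the codebook does not say so.

```python
from enum import Enum


class Narrative(Enum):
    """Narrative roles from codebook item (8), as (morality, strength) pairs."""
    HERO = ("benevolent", "strong")
    VICTIM = ("benevolent", "weak")
    VILLAIN = ("malevolent", "strong")
    FOOL = ("malevolent", "weak")

    @property
    def morality(self):
        return self.value[0]

    @property
    def strength(self):
        return self.value[1]


# "Other" and "None" are valid codebook values but sit outside the 2x2 grid.
VALID_LABELS = {n.name.lower() for n in Narrative} | {"other", "none"}


def parse_narratives(labels):
    """Normalize one post's narrative labels (more than one is allowed)."""
    norm = {label.strip().lower() for label in labels}
    unknown = norm - VALID_LABELS
    if unknown:
        raise ValueError(f"unknown narrative labels: {sorted(unknown)}")
    # Assumption: "None" cannot co-occur with other labels.
    if "none" in norm and len(norm) > 1:
        raise ValueError('"None" cannot be combined with other narrative labels')
    return norm


def has_malevolent(labels):
    """True if a post carries a malevolent role (villain or fool)."""
    return any(
        Narrative[label.upper()].morality == "malevolent"
        for label in parse_narratives(labels)
        if label.upper() in Narrative.__members__
    )


print(Narrative(("malevolent", "weak")))  # Narrative.FOOL
print(has_malevolent(["Hero", "Fool"]))   # True
```

Encoding the roles by their two axes makes groupings such as “malevolent narratives” a property lookup rather than a hard-coded list of label names.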

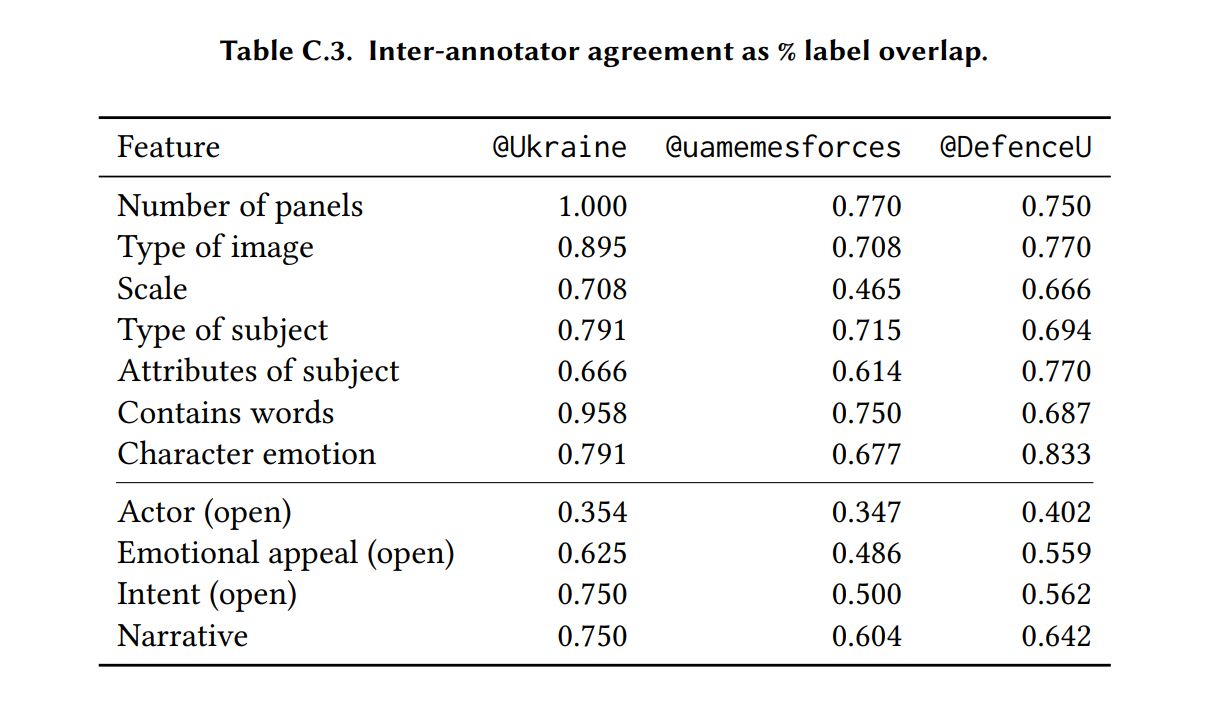

C INTER-ANNOTATOR AGREEMENT

Table C.3 reports inter-annotator agreement among the authors for the annotations described in Methods. Overall, the labelers largely agree; agreement is lowest for the open label Actor (which was later cleaned and aggregated).
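The agreement statistic reported in Table C.3 is not named in this section. As an illustration only, the sketch below computes Cohen’s kappa, a common chance-corrected measure for two annotators assigning one categorical label per item (for example, codebook item (2), Type of image). Multi-label fields such as Narrative would need a per-label or set-based variant. The function name and the labels are hypothetical.

```python
from collections import Counter


def cohens_kappa(a, b):
    """Cohen's kappa for two annotators' single-label codes on the same items."""
    if len(a) != len(b) or not a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(a)
    observed = sum(x == y for x, y in zip(a, b)) / n
    # Chance agreement: product of the two annotators' marginal label frequencies.
    counts_a, counts_b = Counter(a), Counter(b)
    expected = sum(counts_a[k] * counts_b[k] for k in counts_a) / (n * n)
    if expected == 1.0:
        # Both used one identical label throughout; kappa is undefined,
        # and this sketch treats the case as perfect agreement.
        return 1.0
    return (observed - expected) / (1 - expected)


# Hypothetical "Type of image" codes for six posts (NOT the study's data).
ann1 = ["photo", "photo", "screenshot", "illustration", "photo", "screenshot"]
ann2 = ["photo", "screenshot", "screenshot", "illustration", "photo", "photo"]
print(round(cohens_kappa(ann1, ann2), 3))  # 0.455
```

Here the annotators agree on 4 of 6 items (0.667), but chance alone would produce 14/36 agreement, so kappa is noticeably lower than raw agreement. `sklearn.metrics.cohen_kappa_score` computes the same quantity; Fleiss’ kappa or Krippendorff’s alpha are the usual alternatives for more than two annotators.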

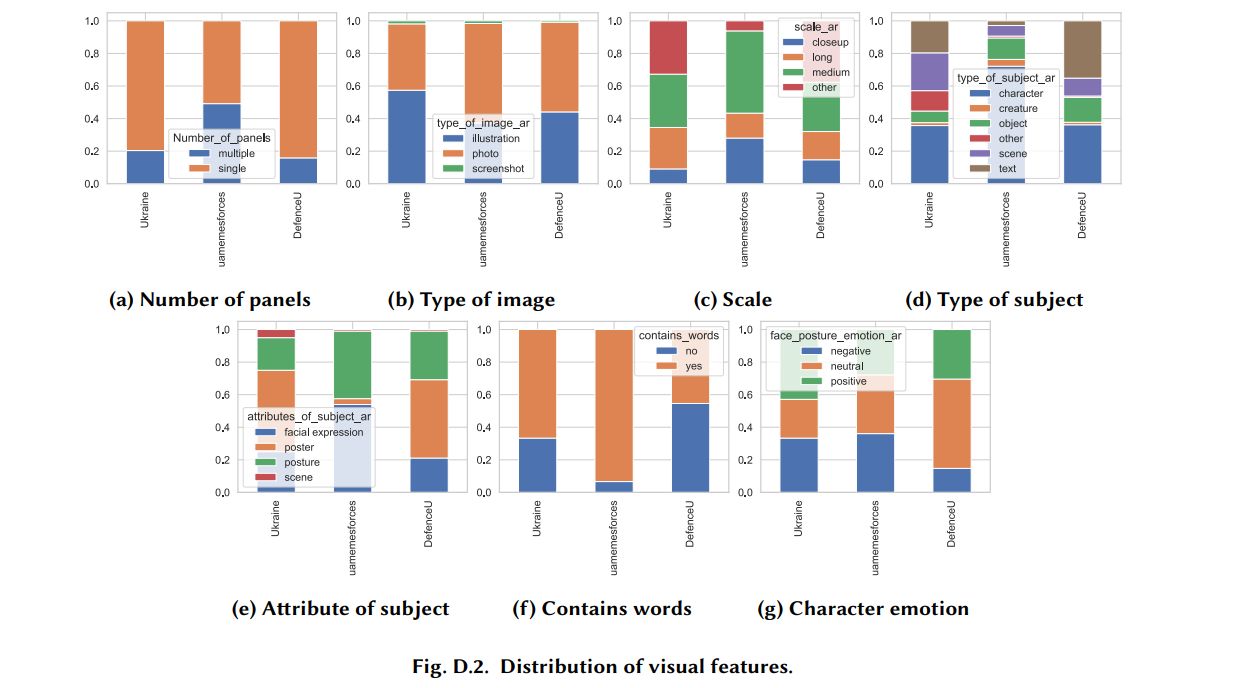

D VISUAL CONTENT FEATURES

Figure D.2 contains summary statistics of the visual features of the content. The content produced by the three accounts has markedly different features. The @uamemesforces account tends to publish content spanning both multiple and single panels, mostly at a medium scale and featuring a character for whom both posture and facial expression may be important; the vast majority of its content contains words. The @Ukraine and @DefenceU accounts are more similar to each other: most of their content has a single panel and uses more illustrations/posters, which contain text. @DefenceU is especially likely to post content without words and with neutral emotional expressions.
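Per-account summaries like those in Figure D.2 amount to normalized counts of each annotated feature value within each account’s posts. A minimal sketch, assuming one record per post with hypothetical field names (`account`, `panels`, `contains_words`) and toy values that are not the data behind the figure:

```python
from collections import Counter, defaultdict


def feature_shares(records, feature):
    """Share of each value of `feature` within each account's posts.

    records -- iterable of dicts with an "account" key and one key per
               annotated feature (field names are hypothetical)
    """
    counts = defaultdict(Counter)
    for record in records:
        counts[record["account"]][record[feature]] += 1
    shares = {}
    for account, value_counts in counts.items():
        total = sum(value_counts.values())
        shares[account] = {value: n / total for value, n in value_counts.items()}
    return shares


# Hypothetical annotated posts (NOT the values behind Figure D.2).
posts = [
    {"account": "@DefenceU", "panels": "single", "contains_words": False},
    {"account": "@DefenceU", "panels": "single", "contains_words": False},
    {"account": "@DefenceU", "panels": "single", "contains_words": True},
    {"account": "@uamemesforces", "panels": "multiple", "contains_words": True},
    {"account": "@uamemesforces", "panels": "single", "contains_words": True},
]
print(feature_shares(posts, "contains_words"))
```

Normalizing within each account, rather than over the whole dataset, keeps accounts with very different posting volumes comparable.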

This paper is available on arXiv under a CC 4.0 license.