Authors:

(1) PIOTR MIROWSKI and KORY W. MATHEWSON, DeepMind, United Kingdom (both authors contributed equally to this research);

(2) JAYLEN PITTMAN, Stanford University, USA (work done while at DeepMind);

(3) RICHARD EVANS, DeepMind, United Kingdom.

Table of Links

Storytelling, The Shape of Stories, and Log Lines

The Use of Large Language Models for Creative Text Generation

Evaluating Text Generated by Large Language Models

Conclusions, Acknowledgements, and References

A. RELATED WORK ON AUTOMATED STORY GENERATION AND CONTROLLABLE STORY GENERATION

B. ADDITIONAL DISCUSSION FROM PLAYS BY BOTS CREATIVE TEAM

C. DETAILS OF QUANTITATIVE OBSERVATIONS

E. FULL PROMPT PREFIXES FOR DRAMATRON

F. RAW OUTPUT GENERATED BY DRAMATRON

6 PARTICIPANT SURVEYS

6.1 Qualitative and Quantitative Results on Participant Surveys

Of the 15 study participants, 13 responded to our post-session feedback form. The form gave participants the following instruction: “When answering these questions, please reflect on the interactive co-authorship session as well as considering the use of an interactive AI system like Dramatron in the future”, and asked nine questions. Each question was answered on a Likert-type scale ranging from 1 (Strongly Disagree) to 5 (Strongly Agree). These questions are slightly adapted from Yuan et al. [122] and Stevenson et al. [104]: (1) I found the AI system helpful, (2) I felt like I was collaborating with the AI system, (3) I found it easy to write with the AI system, (4) I enjoyed writing with the AI system, (5) I was able to express my creative goals while writing with the AI system, (6) The script(s) written with the AI system feel unique, (7) I feel I have ownership over the created script(s), (8) I was surprised by the responses from the AI system, and (9) I’m proud of the final outputs.

We also asked five free-form questions. Two questions assessed the participants’ exposure to AI writing tools (In a few words: what is your experience in using AI tools for writing for theatre or film or during performance on stage?) and their industry experience (In a few words: what is your experience in theatre or film/TV?). We used the answers to manually define a binary indicator variable (Has experience of AI writing tools) and a three-class category for each participant’s primary domain of expertise (Improvisation, Scripted Theatre and Film/TV). Three more questions gave participants an opportunity to provide developmental feedback about the system: What is one thing that the AI system did well?, What is one improvement for the AI system? and Please provide any comments, reflections, or open questions that came up for you during the co-authorship session or when answering this survey.

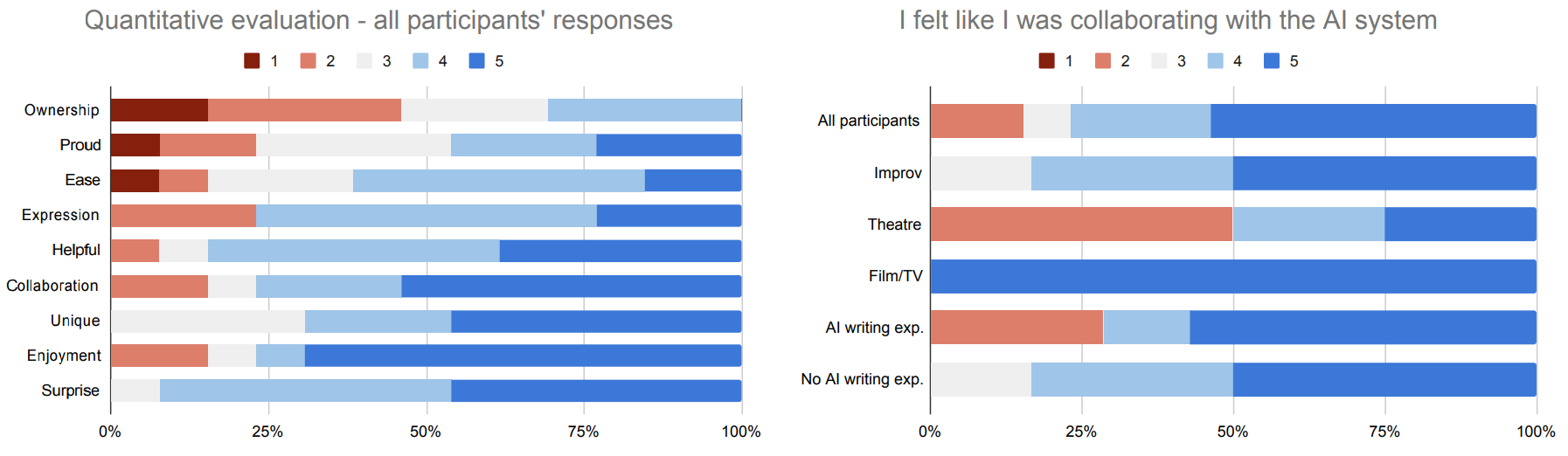

Aggregated responses are plotted in Figure 5 (left). Figure 5 (right) breaks down responses to the question on collaboration by industry background and experience (see Figure 7 for more). The results are summarized below.

6.1.1 AI-generated scripts can be surprising, enjoyable, and unique. The statements that received the strongest positive response were I was surprised by the responses from the AI system (92% of participants agreed, 46% strongly agreed), followed by I enjoyed writing with the AI system (77% agreed, 69% strongly agreed) and The script(s) written with the AI system feel unique (69% agreed, 46% strongly agreed), referring to lines of dialogue or connections between characters.
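The percentages above can be read as two nested counts over the 13 responses. The following is a minimal sketch of that aggregation, assuming that "agreed" covers scores of 4 or 5 and therefore includes "strongly agreed" (score 5). The function name `likert_summary` and the example scores are hypothetical and are not the study's data.

```python
from collections import Counter

def likert_summary(responses):
    """Return (percent agreeing, percent strongly agreeing) for 1-5 Likert scores.

    Assumption: "agreed" counts scores of 4 or 5, so it includes
    "strongly agreed" (a score of 5).
    """
    if not responses:
        raise ValueError("need at least one response")
    counts = Counter(responses)
    n = len(responses)
    agreed = counts[4] + counts[5]
    strongly_agreed = counts[5]
    return 100 * agreed / n, 100 * strongly_agreed / n

# Hypothetical scores from 13 respondents (illustrative only).
example_scores = [5, 5, 5, 5, 5, 5, 4, 4, 4, 4, 4, 4, 3]
agree_pct, strong_pct = likert_summary(example_scores)
print(f"{agree_pct:.0f}% agreed, {strong_pct:.0f}% strongly agreed")
```

Under this reading, the "agreed" figure is always at least as large as the "strongly agreed" figure for the same statement.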

6.1.2 Dramatron can be helpful for expressing creative goals for screenwriting. Positive responses to the statements I felt like I was collaborating with the AI system (77% agreed, 54% strongly agreed), I found the AI system helpful (84% agreed, 38% strongly agreed) and I was able to express my creative goals with the AI system (77% agreed, 23% strongly agreed) suggest a fruitful interaction between a writer and an interactive AI writing tool, in particular for ideation, through multiple choices, twists to the narrative, or specific details.

6.1.3 Dramatron outputs were preferred for screenplays over theatre scripts. We noticed that the subgroup of respondents with a film or TV background gave the highest scores for the surprise, enjoyment, collaboration, helpfulness, expression, ease and ownership questions. Respondents with a theatre background gave the lowest scores for the enjoyment, collaboration, helpfulness, creativity, expression, ease, pride and ownership questions. This reinforces our observations during interviews (see the criticism of log line-based conditioning for generating theatre scripts in Section 5.6).

6.1.4 Dramatron’s hierarchical prompting interface is moderately easy to use. Participants were more split about the ease of use of the tool (61% agreed) and whether they felt proud of the output of the system (46% agreed), with one participant strongly disagreeing. We note that these two answers were highly correlated (𝑟 = 0.9), and that several questions had a relatively strong correlation (𝑟 ≥ 0.66): helpfulness, collaboration, ease, enjoyment and expression. As observed during interviews, a recurrent criticism was that Dramatron needs detailed log lines to start the generation.
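For readers who want to reproduce this kind of pairwise analysis on their own survey data, here is a minimal sketch of a correlation between two columns of Likert scores. It assumes the Pearson coefficient, which the text does not state explicitly. The function name `pearson_r` and the two score lists are hypothetical.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation between two equal-length lists of scores."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two lists of equal length with at least 2 items")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant scores")
    return cov / math.sqrt(var_x * var_y)

# Hypothetical answers to the "ease" and "proud" questions (illustrative only).
ease_scores = [4, 5, 3, 2, 4, 5, 1]
pride_scores = [4, 4, 3, 2, 3, 5, 1]
r = pearson_r(ease_scores, pride_scores)
print(f"r = {r:.2f}")
```

Because Likert scores are ordinal, a rank-based coefficient such as Spearman's would be an equally reasonable choice; the sketch uses Pearson only for simplicity.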

6.1.5 Participants felt a low level of ownership for the co-written scripts. More than half of respondents felt they did not own the result of the AI system. One participant commented that the generated script was only a starting point provided by the AI and that the writer still needed to create their own script. One interpretation is that industry experts see Dramatron less as a full script-writing tool than as a learning tool to practice writing and a source of inspiration that generates “not necessarily completed works so much as provocations” (p7).

6.2 Quantitative Observations

We observed that participants would skim the text and remark on specific inspiring lines, but were less focused on the overall coherence of the discourse. That said, participants did notice if the dialogue of a scene was unrelated to that scene’s beat or log line, and most noticed when the generation had excessive repetition. Thus, we pair our qualitative results with a small set of descriptive statistics measuring these features and relevant to our participants’ feedback.

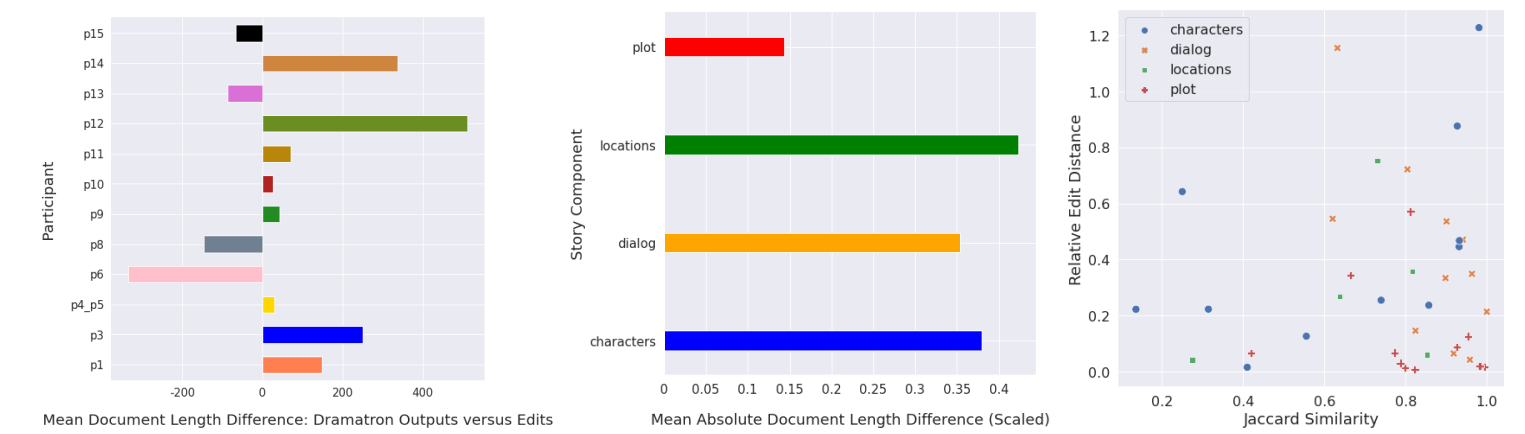

Lemma-based Jaccard similarity is a metric measuring the overlap between the lemmatised vocabularies of two texts. We found that when participants made multiple generations for a scene description, they generally did not choose the outputs with the greatest Jaccard similarity. We performed a one-sided Wilcoxon signed-rank test on Jaccard similarity score differences, testing whether chosen output seeds were more similar to the log line provided by participants. We found that the beats for chosen generations are not more similar to the log line when compared to the beats for generations not chosen (W = 28, p = 0.926). In fact, there is a strong trend in the opposite direction. This suggests that word overlap is not predictive of which output generation is selected.
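As an illustration of the metric, the sketch below computes a Jaccard similarity over two sets of lemmas: the size of their intersection divided by the size of their union. The lemmatiser used in the study is not specified here, so `toy_lemmatise` is a hypothetical suffix-stripping stand-in, and the two example sentences are invented.

```python
import re

def toy_lemmatise(word):
    """Hypothetical stand-in for a real lemmatiser: strips a few English suffixes."""
    for suffix in ("ing", "ed", "es", "s"):
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word

def lemma_set(text):
    """Lower-case the text, split it into words, and return the set of lemmas."""
    return {toy_lemmatise(w) for w in re.findall(r"[a-z']+", text.lower())}

def jaccard_similarity(text_a, text_b):
    """Jaccard similarity |A & B| / |A | B| between the lemma sets of two texts."""
    set_a, set_b = lemma_set(text_a), lemma_set(text_b)
    if not set_a and not set_b:
        return 0.0  # convention chosen here for two empty texts
    return len(set_a & set_b) / len(set_a | set_b)

# Invented log line and beat (illustrative only).
log_line = "A detective hunts ghosts in London"
beat = "The detective hunted a ghost"
score = jaccard_similarity(log_line, beat)
print(f"Jaccard similarity: {score:.3f}")
```

A production version would replace `toy_lemmatise` with a proper lemmatiser from an NLP library; the set arithmetic stays the same.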

Using an open-source repetition scorer [123], we examined the repetition scores for texts where participants generated multiple dialogue outputs but chose only one to incorporate into the script (𝑛 = 3). Using a one-sided Wilcoxon signed-rank test, we found no significant difference between the repetition scores for the chosen Dramatron output and those for alternative seed outputs (W = 57, p = 0.467). This finding aligns with our observations in the interactive sessions where participants tended to delete degenerate repetitions, or as one participant (p13) put it: "[Dramatron] puts meat on the bones... And then you trim the fat by going back and forth."

We interpret our distance measures as indicators of engagement with Dramatron. Figure 6 (right) displays mean relative Levenshtein distances for each participant, along with Jaccard similarity.
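To make the paired comparison concrete, the following sketch computes a signed-rank statistic for paired scores (for example, the score of a chosen output against the score of an alternative). It assumes one common convention: zero differences are dropped, tied absolute differences share their average rank, and the statistic is the sum of ranks of the positive differences. Whether the reported W follows this exact convention is an assumption. The function name and the scores are hypothetical.

```python
def signed_rank_statistic(chosen, alternatives):
    """Sum of the ranks of positive paired differences (chosen - alternative)."""
    if len(chosen) != len(alternatives):
        raise ValueError("paired samples must have the same length")
    # Drop pairs with no difference, as in the classic Wilcoxon procedure.
    diffs = [c - a for c, a in zip(chosen, alternatives) if c != a]
    # Indices of the differences, ordered by absolute value.
    order = sorted(range(len(diffs)), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * len(diffs)
    i = 0
    while i < len(order):
        # Find the run of tied absolute differences starting at position i.
        j = i
        while j + 1 < len(order) and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        average_rank = (i + j) / 2 + 1  # mean of the 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        i = j + 1
    return sum(rank for diff, rank in zip(diffs, ranks) if diff > 0)

# Hypothetical paired scores (illustrative only).
chosen_scores = [3, 5, 1, 4]
alternative_scores = [1, 6, 1, 1]
w_plus = signed_rank_statistic(chosen_scores, alternative_scores)
print(f"W+ = {w_plus}")
```

The sketch stops at the statistic. A p-value for a one-sided test would normally come from a statistics package, for example `scipy.stats.wilcoxon` with its `alternative` argument, rather than from hand-written code.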

We also report document length differences, to show the directionality of editing (i.e. whether participants are adding material to, or deleting material from, Dramatron output). Figure 6 (left) shows the mean document length difference by participant. Figure 6 (middle) shows these measures normalised and grouped by type of generation. We notice that four participants tended to add to generated outputs (three of them, p6, p13, and p15, expressed interest in staging the resulting scripts) while eight others tended to remove from and curate the outputs (including p1, who staged productions). We also notice that the plot outline was the least edited part of the script. During co-authorship we observed that participants tended to delete longer location descriptions and parts of dialogue (especially loops), and rewrite the character descriptions.
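The two editing measures discussed in this section, relative Levenshtein distance and document length difference, can be sketched as follows. The exact definitions are not given in this section, so the sketch makes three assumptions: distances are computed over characters, the relative distance divides by the length of the longer text, and the length difference is edited length minus generated length (positive when the participant added material). All function names and example strings are hypothetical.

```python
def levenshtein(a, b):
    """Minimum number of single-character insertions, deletions and substitutions."""
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            substitution_cost = 0 if char_a == char_b else 1
            current.append(min(
                previous[j] + 1,                       # delete char_a
                current[j - 1] + 1,                    # insert char_b
                previous[j - 1] + substitution_cost,   # substitute or match
            ))
        previous = current
    return previous[-1]

def relative_levenshtein(generated, edited):
    """Edit distance divided by the longer text's length (assumed normalisation)."""
    longest = max(len(generated), len(edited))
    return levenshtein(generated, edited) / longest if longest else 0.0

def length_difference(generated, edited):
    """Positive when the participant added text, negative when they removed text."""
    return len(edited) - len(generated)

# Invented generated output and participant edit (illustrative only).
generated_text = "The ghost waits in the hall. The ghost waits in the hall."
edited_text = "The ghost waits in the hall."
print(relative_levenshtein(generated_text, edited_text))
print(length_difference(generated_text, edited_text))
```

Reporting both measures is useful because the relative distance captures how much a text changed, while the sign of the length difference shows whether the change was mostly addition or deletion.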

This paper is available on arXiv under CC 4.0 license.