Authors:

(1) Muzhaffar Hazman, University of Galway, Ireland;

(2) Susan McKeever, Technological University Dublin, Ireland;

(3) Josephine Griffith, University of Galway, Ireland.


B Metric: Weighted F1-Score

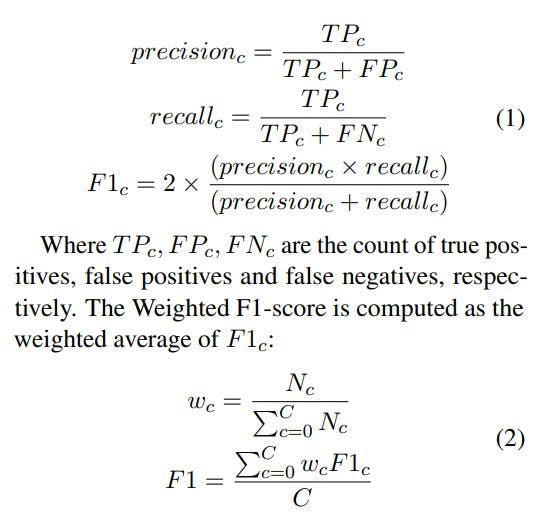

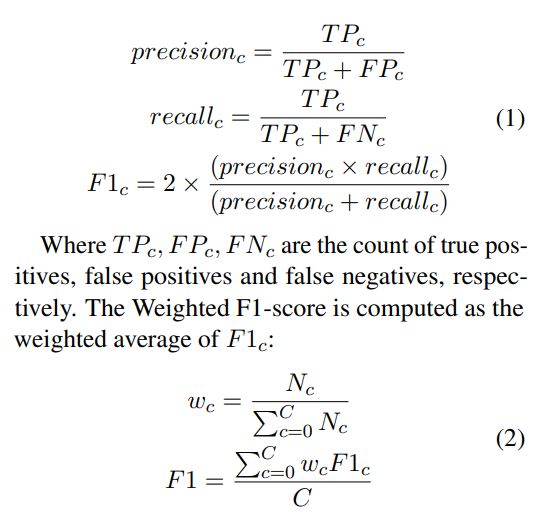

The performance of our models is measured by the Weighted F1-Score, in line with the reporting convention set by the authors of the Memotion 2.0 dataset (Patwa et al., 2022). The F1-Score is the harmonic mean of precision and recall, weighting both equally. "Weighted" here denotes that the F1-Score is first computed per class and then averaged, with each class weighted by its proportion of occurrences in the ground-truth labels. We compute this using PyTorch's multiclass F1-score implementation. Class-wise F1-scores, F1_c where c ∈ {1, 2, 3}, are computed from the class-wise precision P_c and recall R_c as:

$$F1_c = \frac{2 \cdot P_c \cdot R_c}{P_c + R_c}$$

and the Weighted F1-Score as:

$$F1_{\text{weighted}} = \sum_{c=1}^{3} \frac{N_c}{N} \, F1_c, \qquad N = \sum_{c=1}^{3} N_c$$

Here, N_c is the number of samples in the testing set whose ground-truth label is c. The Weighted F1 is often used when classes are imbalanced, as each class contributes in proportion to its share of the samples. This suits Memotion 2.0, whose training, validation and testing sets show significant and varying class imbalance. Note that this weighted averaging can produce an F1-score that does not lie between the weighted Precision and Recall scores, because an average of per-class harmonic means is not, in general, the harmonic mean of the averaged Precision and Recall.
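The per-class and weighted scores described in this section can be sketched from scratch in plain Python. This is a minimal illustration, not the PyTorch implementation used in the paper: the function names `per_class_scores` and `weighted_scores`, the toy labels, and the convention of returning 0.0 when a denominator is zero are all assumptions made for this example.

```python
from collections import Counter


def per_class_scores(y_true, y_pred, classes):
    """Return {c: (precision, recall, f1)}, treating each class c one-vs-rest."""
    pairs = list(zip(y_true, y_pred))
    scores = {}
    for c in classes:
        tp = sum(t == c and p == c for t, p in pairs)  # label c, predicted c
        fp = sum(t != c and p == c for t, p in pairs)  # predicted c, label differs
        fn = sum(t == c and p != c for t, p in pairs)  # label c, predicted otherwise
        # Assumption (hypothetical convention): a zero denominator yields 0.0.
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        scores[c] = (precision, recall, f1)
    return scores


def weighted_scores(y_true, y_pred, classes=(1, 2, 3)):
    """Average per-class (P, R, F1), weighting class c by N_c / N."""
    support = Counter(y_true)  # N_c: ground-truth count of each class
    total = len(y_true)        # N: total number of samples
    per_class = per_class_scores(y_true, y_pred, classes)
    return tuple(
        sum(support[c] / total * per_class[c][i] for c in classes)
        for i in range(3)
    )


# Hypothetical toy labels: class 1 is under-predicted, class 2 over-predicted.
y_true = [1, 1, 1, 1, 2, 2, 2, 2, 3, 3]
y_pred = [1, 2, 2, 2, 2, 2, 2, 2, 3, 3]

p_w, r_w, f1_w = weighted_scores(y_true, y_pred)
print(round(p_w, 4), round(r_w, 4), round(f1_w, 4))  # 0.8286 0.7 0.6509
```

In this toy case the weighted Recall equals plain accuracy (7 of 10 correct), yet the weighted F1 (about 0.651) falls below both the weighted Precision and the weighted Recall. Class 1 has high precision but low recall and class 2 the reverse, so each per-class harmonic mean is pulled down, which is the effect the note above describes.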

This paper is available on arXiv under a CC 4.0 license.