Authors:

(1) Muzhaffar Hazman, University of Galway, Ireland;

(2) Susan McKeever, Technological University Dublin, Ireland;

(3) Josephine Griffith, University of Galway, Ireland.

2 Related Works

2.1 Meme Affective Classifiers

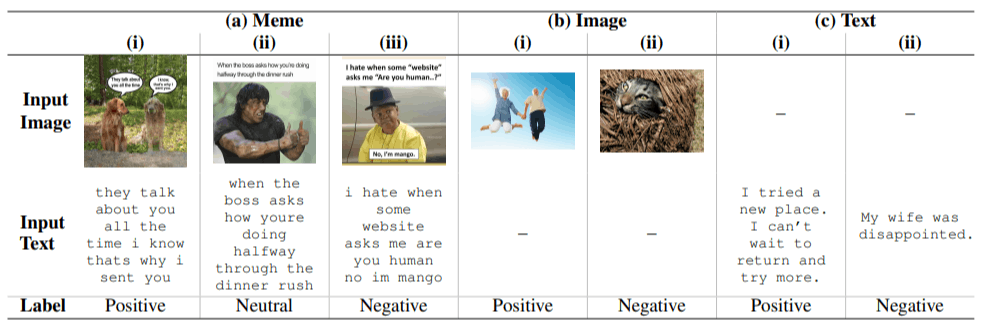

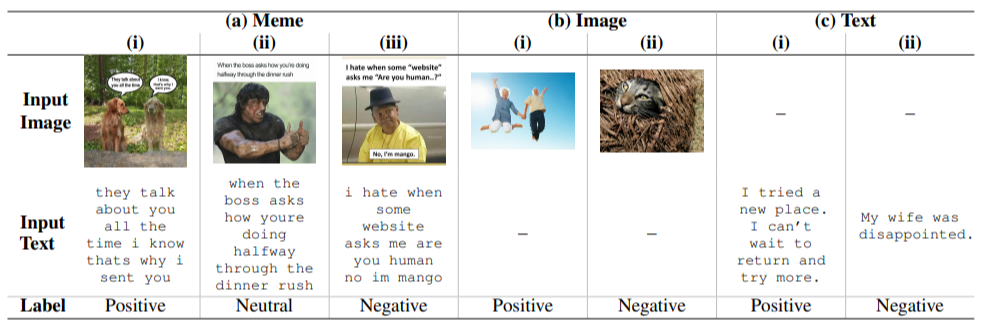

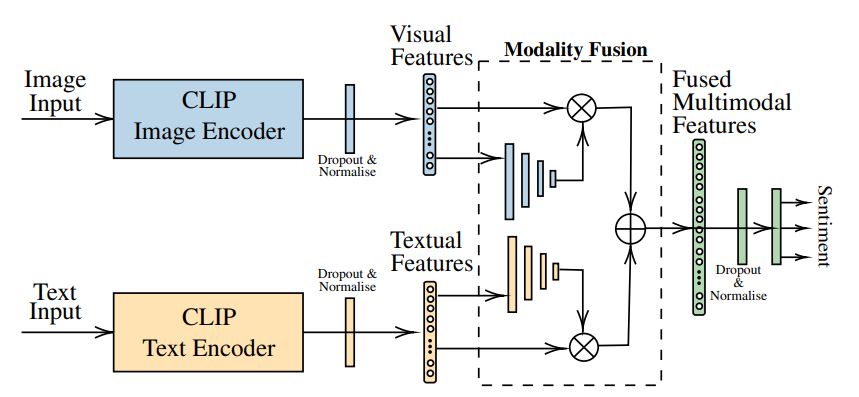

Meme sentiment classifiers fall within the broader category of meme affective classifiers, which can be defined as multimodal deep learning models trained to classify memes by a given affect dimension, including sentiment polarity, offensiveness, motivationality, sarcasm (Sharma et al., 2020; Patwa et al., 2022; Mishra et al., 2023), hate speech (Kiela et al., 2020), and trolling behaviour (Suryawanshi et al., 2020). Based on the majority of state-of-the-art meme classifiers, the current literature suggests that these different tasks do not require architecturally distinct solutions (Hazman et al., 2023). Broadly, two architectural approaches exist among multimodal meme affective classifiers. Multi-encoder models use multiple pretrained unimodal encoders whose representations are fused prior to classification; numerous examples are summarised by Sharma et al. (2020) and Patwa et al. (2022). These models pair a text encoder with an image encoder, each pretrained on unimodal self-supervised or unsupervised tasks: for example, BERT or SentenceTransformer for text, and VGG-19 or ResNet50 for images. In contrast, single-encoder models (Muennighoff, 2020; Zhu, 2020) build on a vision-and-language model, most often a transformer, that has been pretrained on multimodal tasks and accepts both modalities as a single input; examples include VLBERT, UNITER, ERNIE-ViL, DeVLBERT and VisualBERT. There is little empirical evidence to show that one architectural approach consistently outperforms the other in the various meme classification tasks.
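To make the multi-encoder pattern concrete, the following is a minimal sketch of fusion by concatenation followed by a linear classifier. It is an illustration only, not the architecture of any cited work: `toy_text_encoder` and `toy_image_encoder` are hypothetical stand-ins for pretrained encoders, and the weights are made up rather than learned.

```python
from typing import Callable, List, Sequence

Vector = List[float]


def toy_text_encoder(text: str) -> Vector:
    # Hypothetical stand-in for a pretrained text encoder (e.g. BERT).
    return [float(len(text.split())), float(text.count("!"))]


def toy_image_encoder(pixels: Sequence[float]) -> Vector:
    # Hypothetical stand-in for a pretrained image encoder (e.g. VGG-19).
    return [sum(pixels) / len(pixels)]


def fuse(text_vec: Sequence[float], image_vec: Sequence[float]) -> Vector:
    # Fusion by concatenation; other fusion strategies are possible.
    return list(text_vec) + list(image_vec)


def classify(
    text: str,
    pixels: Sequence[float],
    weights: Sequence[Sequence[float]],
    biases: Sequence[float],
    text_encoder: Callable[[str], Vector] = toy_text_encoder,
    image_encoder: Callable[[Sequence[float]], Vector] = toy_image_encoder,
) -> int:
    # Encode each modality separately, fuse, then score each class linearly.
    fused = fuse(text_encoder(text), image_encoder(pixels))
    scores = [
        sum(w * x for w, x in zip(row, fused)) + b
        for row, b in zip(weights, biases)
    ]
    # Return the index of the highest-scoring class.
    return max(range(len(scores)), key=lambda i: scores[i])


# Made-up parameters for three classes (negative, neutral, positive).
WEIGHTS = [[-1.0, 0.0, 0.0], [0.0, 0.0, 1.0], [0.0, 1.0, 1.0]]
BIASES = [0.0, 0.0, 0.0]
label = classify("so funny!", [0.2, 0.4, 0.6], WEIGHTS, BIASES)
```

A single-encoder model would instead pass both modalities to one pretrained multimodal network, so there would be no separate `fuse` step.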

Typically, both multi- and single-encoder architectures use transfer learning by finetuning pretrained models on a dataset of labelled memes. While pretraining is often assumed to yield performance benefits for meme classification tasks, this has not been exhaustively proven, especially when viewed relative to studies in image-only and text-only tasks (Jiang et al., 2022). On the one hand, multimodally pretrained baseline models for the Hateful Memes dataset (Kiela et al., 2020) outperformed their unimodally pretrained counterparts. On the other hand, Suryawanshi et al. (2020) showed that the use of pretrained weights did not consistently provide performance benefits to their image-only classifiers of trolling behaviour in Tamil code-mixed memes. Although the use of pretrained encoders is common amongst meme sentiment classifiers (Sharma et al., 2022; Bucur et al., 2022; Pramanick et al., 2021a; Sharma et al., 2020; Patwa et al., 2022), there is little evidence as to whether pretrained representations are suitable for the downstream task or whether an encoder’s performance in classifying unimodal input transfers to classifying multimodal memes.

Beyond using pretrained image and text encoders, several recent works have attempted to incorporate external knowledge into meme classifiers. Some employed additional encoders to augment the image modality representation with features such as human faces (Zhu, 2020; Hazman et al., 2023), while others have incorporated image attributes (including entity recognition via a large knowledge base) (Pramanick et al., 2021b), cross-modal positional information (Shang et al., 2021; Hazman et al., 2023), social media interactions (Shang et al., 2021), and image captioning (Blaier et al., 2021). To our knowledge, no published attempts have been made to directly incorporate unimodal sentiment analysis data into a multimodal meme classifier.

2.2 Supplementary Training of Meme Classifiers

Several recent works addressed the lack of labelled multimodal memes by incorporating additional non-meme data. Sharma et al. (2022) present two self-supervised representation learning approaches to learn the “semantically rich cross-modal feature[s]” needed in various meme affective classification tasks. They finetuned an image and a text encoder on image-with-caption tweets before fitting these representations to several multimodal meme classification tasks, including sentiment, sarcasm, humour, offence, motivationality, and hate speech. These approaches showed performance improvement on some, but not all, tasks. In some cases, their approach underperformed in comparison to the more typical supervised finetuning approaches. Crucially, since the authors did not compare their performance to that of the same architecture without the self-supervised step, isolating performance gains directly attributable to this step is challenging. Furthermore, while the authors reported multiple tasks where their approach performed best while training on only 1% of the available memes, their included training curves imply that these performance figures were selected at the point of maximum performance on the testing set during training. This differs from the typical approach of early stopping based on performance on a separately defined validation set, which hinders direct comparisons to competing solutions.
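The difference between the two selection procedures can be illustrated with a minimal sketch. The per-epoch scores below are invented for illustration and do not come from any cited work; `early_stopping_epoch` and its `patience` parameter are hypothetical names for a common form of early stopping.

```python
from typing import Sequence


def early_stopping_epoch(val_scores: Sequence[float], patience: int = 2) -> int:
    """Return the epoch with the best validation score seen before
    `patience` consecutive epochs pass without improvement."""
    best_epoch = 0
    best_score = float("-inf")
    for epoch, score in enumerate(val_scores):
        if score > best_score:
            best_epoch, best_score = epoch, score
        elif epoch - best_epoch >= patience:
            break  # no improvement for `patience` epochs: stop training
    return best_epoch


# Invented per-epoch scores for one training run.
val_scores = [0.30, 0.34, 0.36, 0.35, 0.33, 0.37]
test_scores = [0.29, 0.33, 0.34, 0.38, 0.32, 0.31]

# Typical protocol: choose the epoch on validation data, then report
# the test score at that epoch.
chosen = early_stopping_epoch(val_scores, patience=2)
reported = test_scores[chosen]

# Selecting the peak of the test curve instead gives an optimistic figure.
test_peak = max(test_scores)
```

In this invented run, the validation-based protocol reports 0.34, while picking the maximum of the test curve would report 0.38, so figures obtained under the two procedures are not directly comparable.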

Bucur et al. (2022) proposed a multitask learning approach that simultaneously trained a classifier on different meme classification tasks (sentiment, sarcasm, humour, offence, and motivationality) for the same meme inputs. Their results showed that multitask learning underperformed in the binary detection of humour, sarcasm, and offensiveness, and was effective only in predicting the intensity of sarcasm and offensiveness of a meme. In sentiment classification, this multitask approach showed inconsistent results: although multitask learning did not improve the performance of their text-only classifier, their multitask multimodal classifier offers the best reported results on the Memotion 2.0 sentiment classification task to date.

To the best of our knowledge, only one previous work used unimodal inputs to supplement training of multimodal meme classifiers. Suryawanshi et al.’s (2020) initial benchmarking of the TamilMemes dataset examined whether the inclusion of unimodal images improved the performance of their ResNet-based image-only model in detecting trolling behaviour in Tamil memes. The authors augmented their dataset of memes with images collected from Flickr, labelling these images as not containing trolling language. They found that this augmentation with 1,000 non-meme images decreased the performance of their classifier. With 30,000 images, their classifier performed identically to one that only used pretrained weights and supervised training on memes; both were outperformed by their model that did not use either pretrained weights or data augmentations.

Existing supplementary approaches to improve meme classification performance have shown mixed results. Notably, the observations made in these works were measured only once and were not accompanied by statistical significance tests, necessitating caution when drawing conclusions on their effectiveness.
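As an illustration of what such a test could look like, the sketch below applies an exact McNemar test to the paired predictions of two classifiers on the same test set. This is one possible choice of test, assumed here for illustration; it is not a procedure taken from the works discussed above, and the labels and predictions are invented.

```python
from math import comb
from typing import Sequence, Tuple


def mcnemar_exact(
    y_true: Sequence[int], pred_a: Sequence[int], pred_b: Sequence[int]
) -> Tuple[int, int, float]:
    """Exact two-sided McNemar test on paired predictions.

    Returns (b, c, p_value), where b counts items only model A classified
    correctly and c counts items only model B classified correctly.
    """
    b = sum(1 for t, a, m in zip(y_true, pred_a, pred_b) if a == t and m != t)
    c = sum(1 for t, a, m in zip(y_true, pred_a, pred_b) if a != t and m == t)
    n = b + c
    if n == 0:
        return b, c, 1.0  # the models never disagree on correctness
    # Under the null hypothesis, each discordant item favours A or B
    # with probability 0.5, so min(b, c) follows Binomial(n, 0.5).
    tail = sum(comb(n, i) for i in range(min(b, c) + 1)) / 2 ** n
    return b, c, min(1.0, 2 * tail)


# Invented example: 20 test items, 12 of which the models disagree on.
y_true = [1] * 20
pred_a = [1, 1] + [0] * 10 + [1] * 8   # A alone is right on 2 items
pred_b = [0, 0] + [1] * 10 + [1] * 8   # B alone is right on 10 items
b, c, p_value = mcnemar_exact(y_true, pred_a, pred_b)
```

A test of this kind addresses only the comparison of two fixed models on one test set; accounting for variation across repeated training runs would additionally require repeating the measurement, for example over several random seeds.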

This paper is available on arXiv under a CC 4.0 license.