Authors:

(1) Rui Cao, Singapore Management University;

(2) Ming Shan Hee, Singapore University of Technology and Design;

(3) Adriel Kuek, DSO National Laboratories;

(4) Wen-Haw Chong, Singapore Management University;

(5) Roy Ka-Wei Lee, Singapore University of Technology and Design;

(6) Jing Jiang, Singapore Management University.

6 CONCLUSION

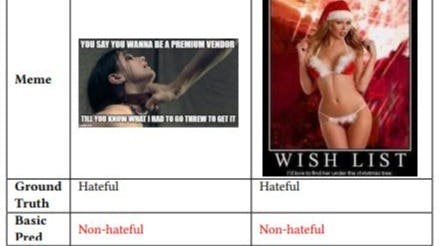

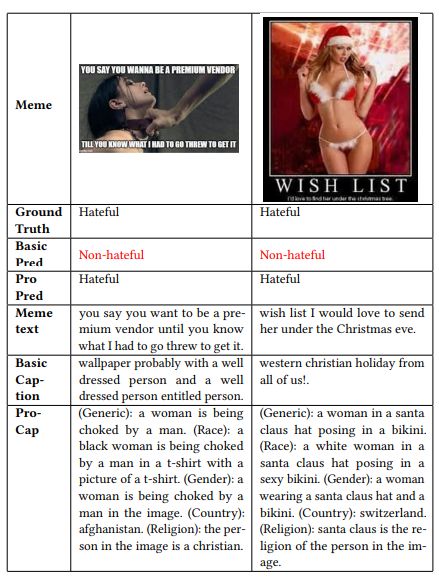

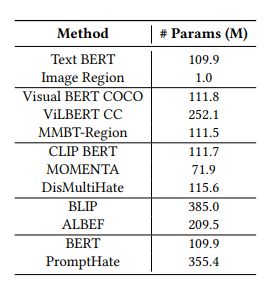

In this study, we leverage pre-trained vision-language models (PVLMs) in a low-computation-cost manner to aid hateful meme detection. Specifically, without any fine-tuning of PVLMs, we probe them in a zero-shot VQA manner to generate hateful-content-centric image captions. With the knowledge distilled from large PVLMs, we observe that a simple language model, BERT, can surpass all multimodal pre-trained BERT models of a similar scale. PromptHate with probe-captioning significantly outperforms previous results and achieves a new state of the art on three benchmarks.
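The pipeline summarized above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the probing questions, the `answer_fn` interface, the `keep` parameter, the `" . "` joiner, and the `[SEP]` separator are all hypothetical, and a stub stands in for the frozen PVLM so the example runs without model weights.

```python
from typing import Callable, Dict, Iterable, Optional

# Illustrative probing questions (assumed wording, not the paper's exact list).
PROBING_QUESTIONS: Dict[str, str] = {
    "general": "What is shown in the image?",
    "gender": "What is the gender of the person in the image?",
    "country": "Which country does the person in the image come from?",
}

# Assumed interface of a frozen VQA model: (image reference, question) -> answer.
AnswerFn = Callable[[str, str], str]


def build_pro_cap(image: str, answer_fn: AnswerFn,
                  questions: Dict[str, str] = PROBING_QUESTIONS,
                  keep: Optional[Iterable[str]] = None) -> str:
    """Concatenate the VQA answers for the chosen probing questions."""
    topics = list(questions) if keep is None else list(keep)
    answers = []
    for topic in topics:
        answer = answer_fn(image, questions[topic]).strip()
        if answer:  # skip questions the model left unanswered
            answers.append(answer)
    return " . ".join(answers)


def build_classifier_input(meme_text: str, pro_cap: str) -> str:
    """Join meme text and caption into one string for a text-only classifier."""
    return f"{meme_text} [SEP] {pro_cap}"


def stub_answer_fn(image: str, question: str) -> str:
    """Stand-in for a frozen PVLM; returns canned answers for the demo."""
    canned = {
        "What is shown in the image?": "a man standing in a kitchen",
        "What is the gender of the person in the image?": "male",
        "Which country does the person in the image come from?": "",
    }
    return canned[question]


if __name__ == "__main__":
    pro_cap = build_pro_cap("meme_001.png", stub_answer_fn)
    print(build_classifier_input("when you finally cook dinner", pro_cap))
```

In practice, `stub_answer_fn` would be replaced by a call to a frozen vision-language model, and the resulting string would be passed to a text-only classifier such as BERT. The optional `keep` argument restricts the caption to a subset of questions.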

Limitations: We point out a few limitations of the proposed method, which suggest potential future directions. First, we heuristically use the answers to all probing questions as Pro-Cap, even though some questions may be irrelevant to the meme target. We report the performance of PromptHate with the answer from a single probing question in Appendix D, highlighting that using all questions may not be the optimal solution. A future direction could involve training a model to dynamically select the probing questions that are most relevant for meme detection. Second, although we demonstrate the effectiveness of Pro-Cap through performance and a case study in this paper, a more thorough analysis is needed. For instance, in the future, we could use a gradient-based interpretation approach [31] to examine how different probing questions influence the final results, thereby improving the interpretability of the models.

REFERENCES

[1] Jean-Baptiste Alayrac, Jeff Donahue, Pauline Luc, Antoine Miech, Iain Barr, Yana Hasson, Karel Lenc, Arthur Mensch, Katie Millican, Malcolm Reynolds, Roman Ring, Eliza Rutherford, Serkan Cabi, Tengda Han, Zhitao Gong, Sina Samangooei, Marianne Monteiro, Jacob Menick, Sebastian Borgeaud, Andrew Brock, Aida Nematzadeh, Sahand Sharifzadeh, Mikolaj Binkowski, Ricardo Barreira, Oriol Vinyals, Andrew Zisserman, and Karen Simonyan. 2022. Flamingo: a Visual Language Model for Few-Shot Learning. CoRR (2022). arXiv:2204.14198

[2] Rui Cao, Roy Ka-Wei Lee, Wen-Haw Chong, and Jing Jiang. 2022. Prompting for Multimodal Hateful Meme Classification. In Proceedings of the 2022 Conference on Empirical Methods in Natural Language Processing, EMNLP. 321–332.

[3] Wenliang Dai, Zihan Liu, Ziwei Ji, Dan Su, and Pascale Fung. 2023. Plausible May Not Be Faithful: Probing Object Hallucination in Vision-Language Pre-training. In Proceedings of the 17th Conference of the European Chapter of the Association for Computational Linguistics EACL. 2136–2148.

[4] Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. 2019. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, NAACL-HLT. 4171–4186.

[5] Elisabetta Fersini, Francesca Gasparini, Giulia Rizzi, Aurora Saibene, Berta Chulvi, Paolo Rosso, Alyssa Lees, and Jeffrey Sorensen. 2022. SemEval-2022 Task 5: Multimedia Automatic Misogyny Identification. In Proceedings of the 16th International Workshop on Semantic Evaluation, SemEval@NAACL. 533–549.

[6] Tianyu Gao, Adam Fisch, and Danqi Chen. 2021. Making Pre-trained Language Models Better Few-shot Learners. In Proceedings of the Annual Meeting of the Association for Computational Linguistics and the International Joint Conference on Natural Language Processing, ACL/IJCNLP. 3816–3830.

[7] Yash Goyal, Tejas Khot, Douglas Summers-Stay, Dhruv Batra, and Devi Parikh. 2017. Making the V in VQA Matter: Elevating the Role of Image Understanding in Visual Question Answering. In IEEE Conference on Computer Vision and Pattern Recognition, CVPR. 6325–6334.

[8] Ming Shan Hee, Wen-Haw Chong, and Roy Ka-Wei Lee. 2023. Decoding the Underlying Meaning of Multimodal Hateful Memes. arXiv preprint arXiv:2305.17678 (2023).

[9] Ming Shan Hee, Roy Ka-Wei Lee, and Wen-Haw Chong. 2022. On explaining multimodal hateful meme detection models. In Proceedings of the ACM Web Conference 2022. 3651–3655.

[10] Drew A. Hudson and Christopher D. Manning. 2019. GQA: A New Dataset for Real-World Visual Reasoning and Compositional Question Answering. In IEEE Conference on Computer Vision and Pattern Recognition, CVPR. 6700–6709.

[11] Douwe Kiela, Suvrat Bhooshan, Hamed Firooz, and Davide Testuggine. 2019. Supervised Multimodal Bitransformers for Classifying Images and Text. In Visually Grounded Interaction and Language (ViGIL), NeurIPS Workshop.

[12] Douwe Kiela, Hamed Firooz, Aravind Mohan, Vedanuj Goswami, Amanpreet Singh, Pratik Ringshia, and Davide Testuggine. 2020. The Hateful Memes Challenge: Detecting Hate Speech in Multimodal Memes. In Advances in Neural Information Processing Systems, NeurIPS.

[13] Gokul Karthik Kumar and Karthik Nandakumar. 2022. Hate-CLIPper: Multimodal Hateful Meme Classification based on Cross-modal Interaction of CLIP Features. CoRR abs/2210.05916 (2022).

[14] Roy Ka-Wei Lee, Rui Cao, Ziqing Fan, Jing Jiang, and Wen-Haw Chong. 2021. Disentangling Hate in Online Memes. In MM ’21: ACM Multimedia Conference. 5138–5147.

[15] Junnan Li, Dongxu Li, Silvio Savarese, and Steven C. H. Hoi. 2023. BLIP-2: Bootstrapping Language-Image Pre-training with Frozen Image Encoders and Large Language Models. CoRR (2023). arXiv:2301.12597

[16] Junnan Li, Dongxu Li, Caiming Xiong, and Steven C. H. Hoi. 2022. BLIP: Bootstrapping Language-Image Pre-training for Unified Vision-Language Understanding and Generation. In International Conference on Machine Learning, ICML, Vol. 162. 12888–12900.

[17] Junnan Li, Ramprasaath R. Selvaraju, Akhilesh Deepak Gotmare, Shafiq R. Joty, Caiming Xiong, and Steven C. H. Hoi. 2021. Align before Fuse: Vision and Language Representation Learning with Momentum Distillation. CoRR (2021). arXiv:2107.07651

[18] Liunian Harold Li, Mark Yatskar, Da Yin, Cho-Jui Hsieh, and Kai-Wei Chang. 2019. VisualBERT: A Simple and Performant Baseline for Vision and Language. CoRR (2019). arXiv:1908.03557

[19] Tsung-Yi Lin, Michael Maire, Serge J. Belongie, James Hays, Pietro Perona, Deva Ramanan, Piotr Dollár, and C. Lawrence Zitnick. 2014. Microsoft COCO: Common Objects in Context. In Computer Vision - ECCV European Conference, Vol. 8693. 740–755.

[20] Phillip Lippe, Nithin Holla, Shantanu Chandra, Santhosh Rajamanickam, Georgios Antoniou, Ekaterina Shutova, and Helen Yannakoudakis. 2020. A Multimodal Framework for the Detection of Hateful Memes. arXiv preprint arXiv:2012.12871 (2020).

[21] Yinhan Liu, Myle Ott, Naman Goyal, Jingfei Du, Mandar Joshi, Danqi Chen, Omer Levy, Mike Lewis, Luke Zettlemoyer, and Veselin Stoyanov. 2019. RoBERTa: A Robustly Optimized BERT Pretraining Approach. CoRR (2019). arXiv:1907.11692

[22] Ilya Loshchilov and Frank Hutter. 2017. Fixing Weight Decay Regularization in Adam. CoRR (2017). arXiv:1711.05101

[23] Jiasen Lu, Dhruv Batra, Devi Parikh, and Stefan Lee. 2019. ViLBERT: Pretraining Task-Agnostic Visiolinguistic Representations for Vision-and-Language Tasks. CoRR (2019). arXiv:1908.02265

[24] Lambert Mathias, Shaoliang Nie, Aida Mostafazadeh Davani, Douwe Kiela, Vinodkumar Prabhakaran, Bertie Vidgen, and Zeerak Waseem. 2021. Findings of the WOAH 5 Shared Task on Fine Grained Hateful Memes Detection. In Proceedings of the 5th Workshop on Online Abuse and Harms (WOAH 2021). 201–206.

[25] Ron Mokady, Amir Hertz, and Amit H. Bermano. 2021. ClipCap: CLIP Prefix for Image Captioning. CoRR (2021). arXiv:2111.09734

[26] Niklas Muennighoff. 2020. Vilio: State-of-the-art Visio-Linguistic Models applied to Hateful Memes. CoRR (2020). arXiv:2012.07788

[27] Shraman Pramanick, Dimitar Dimitrov, Rituparna Mukherjee, Shivam Sharma, Md. Shad Akhtar, Preslav Nakov, and Tanmoy Chakraborty. 2021. Detecting Harmful Memes and Their Targets. In Findings of the Association for Computational Linguistics: ACL/IJCNLP. 2783–2796.

[28] Shraman Pramanick, Shivam Sharma, Dimitar Dimitrov, Md. Shad Akhtar, Preslav Nakov, and Tanmoy Chakraborty. 2021. MOMENTA: A Multimodal Framework for Detecting Harmful Memes and Their Targets. In Findings of the Association for Computational Linguistics: EMNLP. 4439–4455.

[29] Alec Radford, Jong Wook Kim, Chris Hallacy, Aditya Ramesh, Gabriel Goh, Sandhini Agarwal, Girish Sastry, Amanda Askell, Pamela Mishkin, Jack Clark, Gretchen Krueger, and Ilya Sutskever. 2021. Learning Transferable Visual Models From Natural Language Supervision. In Proceedings of the International Conference on Machine Learning, ICML, Vol. 139. 8748–8763.

[30] Shaoqing Ren, Kaiming He, Ross Girshick, and Jian Sun. 2016. Faster R-CNN: towards real-time object detection with region proposal networks. IEEE transactions on pattern analysis and machine intelligence 39, 6 (2016), 1137–1149.

[31] Ramprasaath R. Selvaraju, Michael Cogswell, Abhishek Das, Ramakrishna Vedantam, Devi Parikh, and Dhruv Batra. 2017. Grad-CAM: Visual Explanations from Deep Networks via Gradient-Based Localization. In IEEE International Conference on Computer Vision, ICCV. 618–626.

[32] Piyush Sharma, Nan Ding, Sebastian Goodman, and Radu Soricut. 2018. Conceptual Captions: A Cleaned, Hypernymed, Image Alt-text Dataset For Automatic Image Captioning. In Proceedings of the Annual Meeting of the Association for Computational Linguistics, ACL. 2556–2565.

[33] Hugo Touvron, Thibaut Lavril, Gautier Izacard, Xavier Martinet, Marie-Anne Lachaux, Timothée Lacroix, Baptiste Rozière, Naman Goyal, Eric Hambro, Faisal Azhar, Aurélien Rodriguez, Armand Joulin, Edouard Grave, and Guillaume Lample. 2023. LLaMA: Open and Efficient Foundation Language Models. CoRR (2023). arXiv:2302.13971

[34] Riza Velioglu and Jewgeni Rose. 2020. Detecting Hate Speech in Memes Using Multimodal Deep Learning Approaches: Prize-winning solution to Hateful Memes Challenge. CoRR (2020). arXiv:2012.12975

[35] Yi Zhou and Zhenhao Chen. 2020. Multimodal Learning for Hateful Memes Detection. arXiv preprint arXiv:2011.12870 (2020).

[36] Jiawen Zhu, Roy Ka-Wei Lee, and Wen Haw Chong. 2022. Multimodal zero-shot hateful meme detection. In Proceedings of the 14th ACM Web Science Conference 2022. 382–389.

[37] Ron Zhu. 2020. Enhance Multimodal Transformer With External Label And In-Domain Pretrain: Hateful Meme Challenge Winning Solution. CoRR (2020). arXiv:2012.08290

This paper is available on arXiv under a CC 4.0 license.